A/B testing is one of the most effective ways to improve your website’s conversion rate. But knowing what to do when an A/B test fails is just as crucial for optimization success.

Most businesses assume a failed A/B test means wasted time. That’s a mistake. In reality, these “failed” tests often hold more value than winning ones—if you know how to analyze them properly.

A study by VWO found that only 1 in 7 A/B tests leads to a winning result. That’s roughly 14%.

AppSumo reported similar findings: only 1 in 8 of its tests drove a significant change.

So, if your test didn’t perform as expected, don’t just move on to the next idea blindly. Instead, take a structured approach to uncover insights, refine your testing strategy, and set up future tests for success.

Here are 9 critical questions to answer. Your answers will shape your next move.

1. Was your hypothesis based on data or just a guess?

The foundation of any successful A/B test is a strong hypothesis. If your test failed, revisit the reason you ran it in the first place.

A weak hypothesis is often based on assumptions rather than real user behavior. The best CRO tests come from conversion research—website analytics, heatmaps, session recordings, surveys, and user testing.

Here’s a simple framework for creating a data-driven hypothesis:

“We noticed in [analytics tool] that [problem] on [page/element]. Improving this by [change] will likely result in [expected impact].”

For example:

“We noticed in Google Analytics that our checkout page has a high drop-off rate. Increasing the visibility of free shipping and returns will likely reduce exits and increase conversions.”

If your last test was based on a hunch, it’s time to start using real insights. All-in-one CRO tools like Growie can help by running an AI-powered website audit, analyzing visitor behavior, and identifying key friction points, so you know exactly what to test next and don’t waste time switching between tools.

2. Did you run the test long enough?

One of the biggest mistakes in A/B testing is declaring a result too soon.

If your test didn’t show significant results, ask yourself:

- Did you run the test for at least 7 days to account for day-to-day traffic fluctuations?

- Did you reach statistical significance (usually a 95% confidence level)?

- Did any major external factors (holidays, sales, traffic spikes) affect the test?

If you stopped the test too early, the results may not be reliable. In that case, rerun the test for a longer period to get a clearer picture.
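If your testing tool doesn’t report significance, or you want to double-check it, the checks above can be sketched in a few lines of Python. This is a minimal illustration, not a replacement for your tool’s statistics: it assumes a standard two-proportion z-test, and the function names, the 7-day minimum, and the visitor counts are all hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b are conversion counts, n_a / n_b are visitor counts.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No conversions (or all conversions) in both groups: nothing to compare.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def is_conclusive(conv_a, n_a, conv_b, n_b, days_run, min_days=7, alpha=0.05):
    """True only if the test ran long enough AND reached significance."""
    _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return days_run >= min_days and p_value < alpha


# Hypothetical numbers: 200 of 5,000 control visitors converted vs. 260 of 5,000.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Conclusive after 3 days: ", is_conclusive(200, 5000, 260, 5000, days_run=3))
print("Conclusive after 14 days:", is_conclusive(200, 5000, 260, 5000, days_run=14))
```

An `alpha` of 0.05 corresponds to the 95% confidence level mentioned above. Note that this sketch says nothing about holidays, sales, or traffic spikes; those still need a manual check.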

3. Were the changes noticeable enough?

A/B tests often fail when the variation isn’t bold enough to make an impact.

If you only made minor tweaks—such as changing one word in a headline or adjusting a button’s color—your visitors may not have even noticed the difference.

Instead of small changes, try bigger, bolder variations such as:

- Testing a completely new layout instead of just a minor design tweak

- Rewriting entire sections of copy instead of a single line

- Changing CTA placement or size so it stands out more

If your previous test was too subtle, go bigger next time.
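There is also a statistical side to this: the smaller the lift a change produces, the more visitors you need before the test can detect it. The sketch below estimates the sample size per variant using a common normal-approximation formula for comparing two conversion rates. The function name, the 3% baseline, and the default 95% confidence and 80% power are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a relative lift.

    baseline_rate: current conversion rate, e.g. 0.03 for 3%.
    relative_lift: expected improvement, e.g. 0.10 for a 10% relative lift.
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if p2 == p1 or not 0 < p2 < 1:
        raise ValueError("relative_lift must give a different, valid rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical 3% baseline: compare a subtle tweak with a bold redesign.
subtle = sample_size_per_variant(0.03, 0.05)  # 5% relative lift
bold = sample_size_per_variant(0.03, 0.30)    # 30% relative lift
print(f"Subtle change: ~{subtle:,} visitors per variant")
print(f"Bold change:   ~{bold:,} visitors per variant")
```

Under these assumptions, the subtle change needs many times more traffic than the bold one, which is one reason small tweaks so often end in inconclusive tests on lower-traffic sites.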

4. Did you analyze heatmaps and session recordings?

A failed A/B test doesn’t mean your idea was wrong—it might mean users didn’t interact with it the way you expected.

Before discarding your test, use heatmaps and session recordings to answer key questions:

- Did users even see the change? If it was below the fold or in a sidebar, they might have missed it.

- Did users interact with it? If they hovered but didn’t click, maybe the messaging was unclear.

- Were users confused? If session recordings show hesitation or backtracking, you may need to improve clarity.

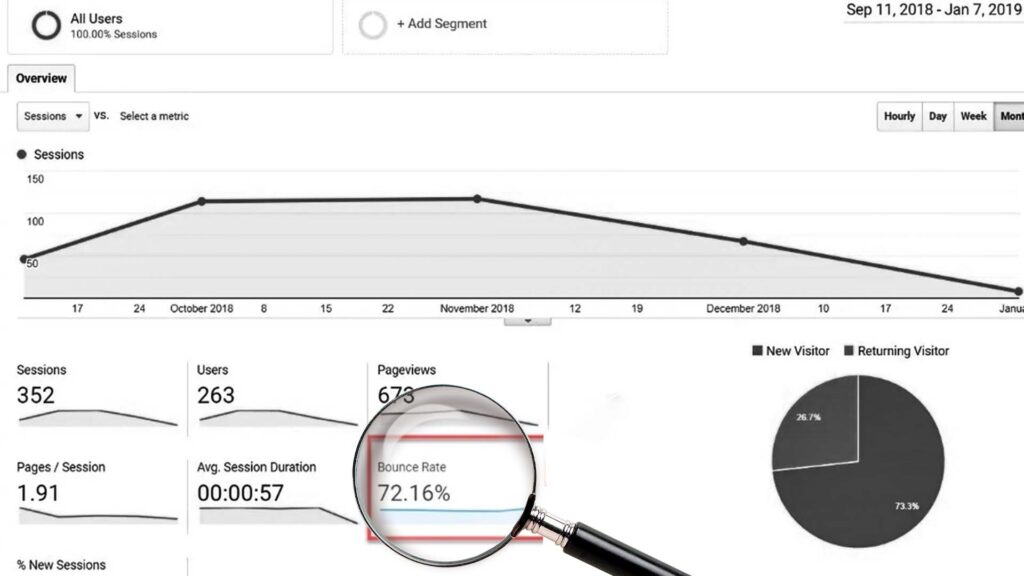

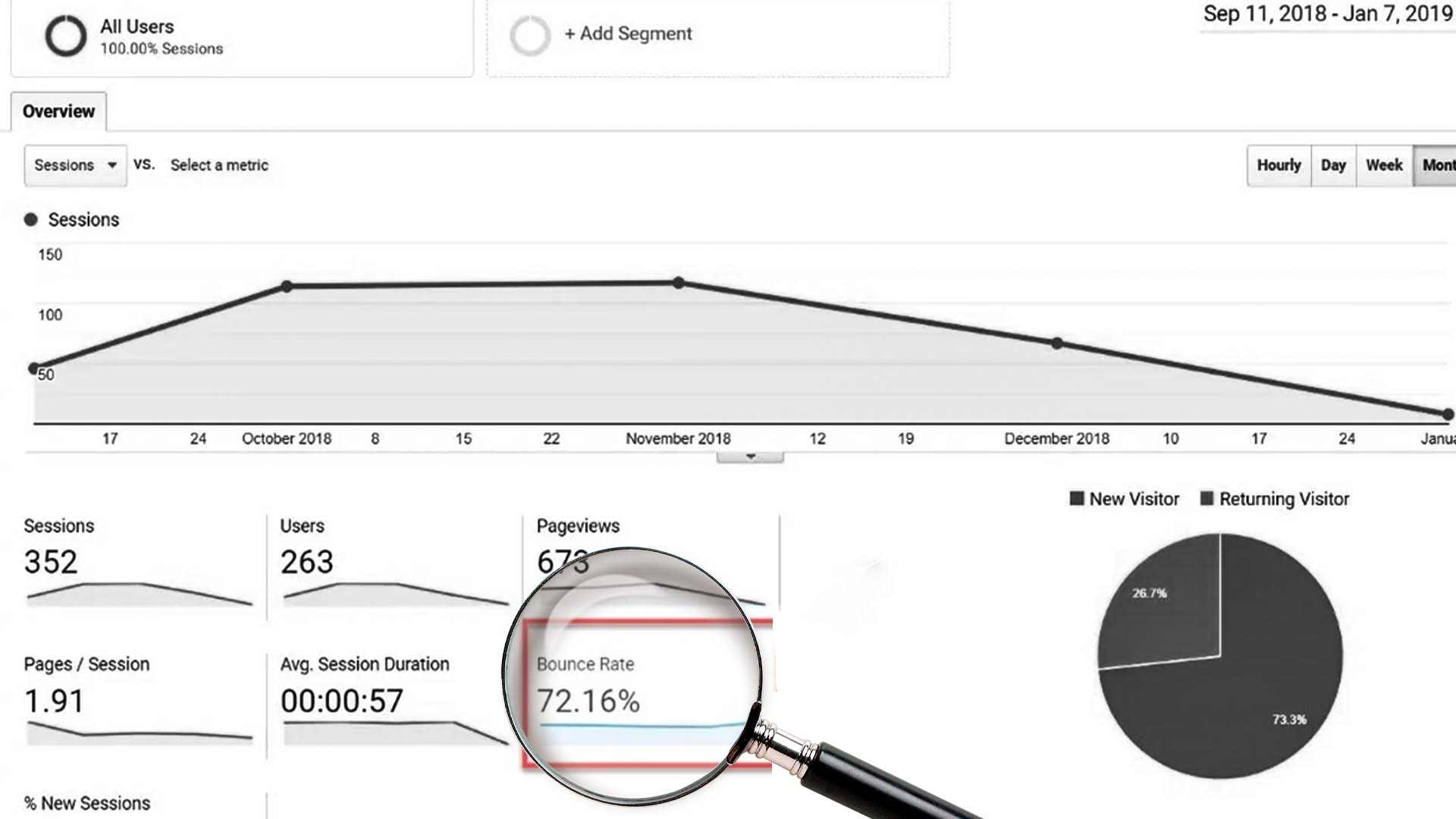

5. Did you segment your results?

Sometimes, a test doesn’t work for everyone, but it works for specific audience segments.

Instead of looking at the overall result, break it down by:

- New vs. returning visitors

- Mobile vs. desktop users

- Traffic sources (organic, paid, social, email, etc.)

For example, your new checkout flow might have hurt conversions for returning customers but increased conversions for first-time buyers.
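If you can export raw visit data from your testing tool, a breakdown like this takes only a few lines. The sketch below assumes each visit is a record with a variant, a segment field, and a conversion flag. The field names and the sample data are hypothetical, so adapt them to whatever your export actually contains.

```python
from collections import defaultdict


def conversion_by_segment(visits, segment_key):
    """Return {(segment, variant): conversion_rate} for the given segment field."""
    totals = defaultdict(lambda: {"visitors": 0, "conversions": 0})
    for visit in visits:
        key = (visit[segment_key], visit["variant"])
        totals[key]["visitors"] += 1
        totals[key]["conversions"] += int(visit["converted"])
    return {
        key: counts["conversions"] / counts["visitors"]
        for key, counts in totals.items()
    }


# Hypothetical export: one record per visitor.
visits = [
    {"variant": "A", "visitor_type": "new", "converted": False},
    {"variant": "A", "visitor_type": "new", "converted": True},
    {"variant": "B", "visitor_type": "new", "converted": True},
    {"variant": "B", "visitor_type": "new", "converted": True},
    {"variant": "A", "visitor_type": "returning", "converted": True},
    {"variant": "B", "visitor_type": "returning", "converted": False},
]

rates = conversion_by_segment(visits, "visitor_type")
for (segment, variant), rate in sorted(rates.items()):
    print(f"{segment:<10} variant {variant}: {rate:.0%}")
```

Keep in mind that each segment contains fewer visitors than the full test, so a difference that shows up in one segment is best treated as a hypothesis for a follow-up test rather than a confirmed win.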

6. How strong was the copy?

Even if your test focused on design changes, weak copy can kill conversions.

If your test involved headlines, CTAs, or product descriptions, ask yourself this:

- Did the copy clearly communicate the benefit to the user?

- Was it action-driven? (“Get Started Free” is stronger than “Learn More”)

- Did it address pain points? (“Save time with automated scheduling” is more compelling than “Easy to use”)

If your copy was generic or unclear, try improving the message before running the next test.

7. Did you optimize the entire funnel?

A common mistake is optimizing a single page in isolation.

If you tested a change on your product page but your landing page messaging was inconsistent, your test may have failed due to misalignment in the user journey.

Review previous steps in the funnel:

- Does your landing page promise one thing, but the product page says something else?

- Is the checkout page missing key trust signals (reviews, security badges)?

- Are your ad headlines and landing page copy aligned?

If your funnel isn’t cohesive, fix those issues first before running another test.

8. Did you involve the right people?

If your A/B tests are being run in isolation by one person or team, you’re missing valuable insights.

Set up a quarterly CRO review meeting with:

- Marketing team (to ensure messaging consistency)

- UX designers (to improve usability)

- Data analysts (to interpret test results)

Collaborating with different teams can help uncover blind spots and generate stronger test ideas.

9. Should you rerun the test with a tweaked variation?

Not all failed tests should be abandoned. In many cases, you can tweak and rerun the test with a slightly different approach.

Consider the following:

- Moving the tested element to a more prominent location

- Making the CTA larger or more visually striking

- Changing the offer or messaging to make it more compelling

If your initial test didn’t work, figure out why, make adjustments, and test again.

In a nutshell, a failed A/B test isn’t a failure—it’s an opportunity.

Instead of moving on to the next test blindly, analyze what went wrong, refine your approach, and use data-driven insights to improve future experiments.

With Growie’s AI-powered website audit, data analytics, and CRO Manager, you can uncover hidden opportunities, ensure better test hypotheses, and make smarter decisions that increase conversions.

Want to stop running random A/B tests and start making data-backed improvements?