No matter how much effort you put into optimizing your marketing campaigns, there’s always an element of uncertainty. You tweak your landing page, test different call-to-action buttons, and refine your email subject lines—all in the pursuit of higher conversion rates. But despite all this, you’re still playing a guessing game.

This is where A/B testing, or split testing, comes in. It’s been the go-to methodology for marketers, product managers, and growth strategists for years. The promise is simple: test two (or more) variations of a webpage, ad, or email, and let the data reveal the winner. It sounds logical, even scientific. But here’s the problem—traditional A/B testing is riddled with inefficiencies that could be quietly draining your revenue.

The fundamental issue? It’s slow. Traditional A/B testing requires a lengthy data collection phase to achieve statistical significance. That means for days, weeks, or even months, you’re splitting traffic between a potentially underperforming variant and a stronger one—leaving money on the table.

Let’s start with a scenario you’ve likely faced. Imagine running a two-week A/B test for a promotional banner on your e-commerce site. Variant A converts at 4%, Variant B at 6%. But because your test requires statistical significance (p < 0.05), you’re forced to wait 14 days before declaring a winner. During that time, half your traffic sees the weaker variant, costing you potential sales. Worse, by the time the test concludes, market conditions might have shifted, making the results irrelevant.
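To put a rough number on that cost, here is a back-of-the-envelope calculation. The 4% and 6% rates and the 14-day duration come from the scenario above; the 10,000 daily visitors and the function name are hypothetical assumptions.

```python
# Back-of-the-envelope cost of a 50/50 split in the banner scenario.
# Assumption (hypothetical): 10,000 visitors per day. Rates and duration are from the text.
DAILY_VISITORS = 10_000
DAYS = 14
RATE_A = 0.04  # weaker variant
RATE_B = 0.06  # stronger variant


def conversions_lost(daily_visitors: int, days: int, rate_a: float, rate_b: float) -> float:
    """Expected conversions forgone by sending half the traffic to the weaker variant."""
    total_visitors = daily_visitors * days
    weaker_share = total_visitors / 2  # visitors routed to the weaker variant
    return weaker_share * (max(rate_a, rate_b) - min(rate_a, rate_b))


lost = conversions_lost(DAILY_VISITORS, DAYS, RATE_A, RATE_B)
print(f"Expected conversions lost over {DAYS} days: {lost:.0f}")
```

Under these assumptions the split gives up about 1,400 expected conversions: 70,000 visitors on the weaker banner, each converting two percentage points less often.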

Scenarios like this are common. Teams using traditional A/B testing often sacrifice short-term gains for long-term insights, assuming the trade-off is unavoidable.

So, what’s the alternative? What if you could optimize while testing—reducing losses and accelerating wins simultaneously? The answer lies in a more dynamic, adaptive approach—one that companies like Google, Netflix, and Amazon have been leveraging for years. It’s called multi-armed bandit testing, and it’s designed to maximize your revenue from day one instead of waiting weeks to declare a winner.

The stakes are higher than ever. In a world where customers expect personalized experiences, sticking with outdated testing practices means falling behind competitors who adapt in real time.

The hidden costs of traditional A/B testing

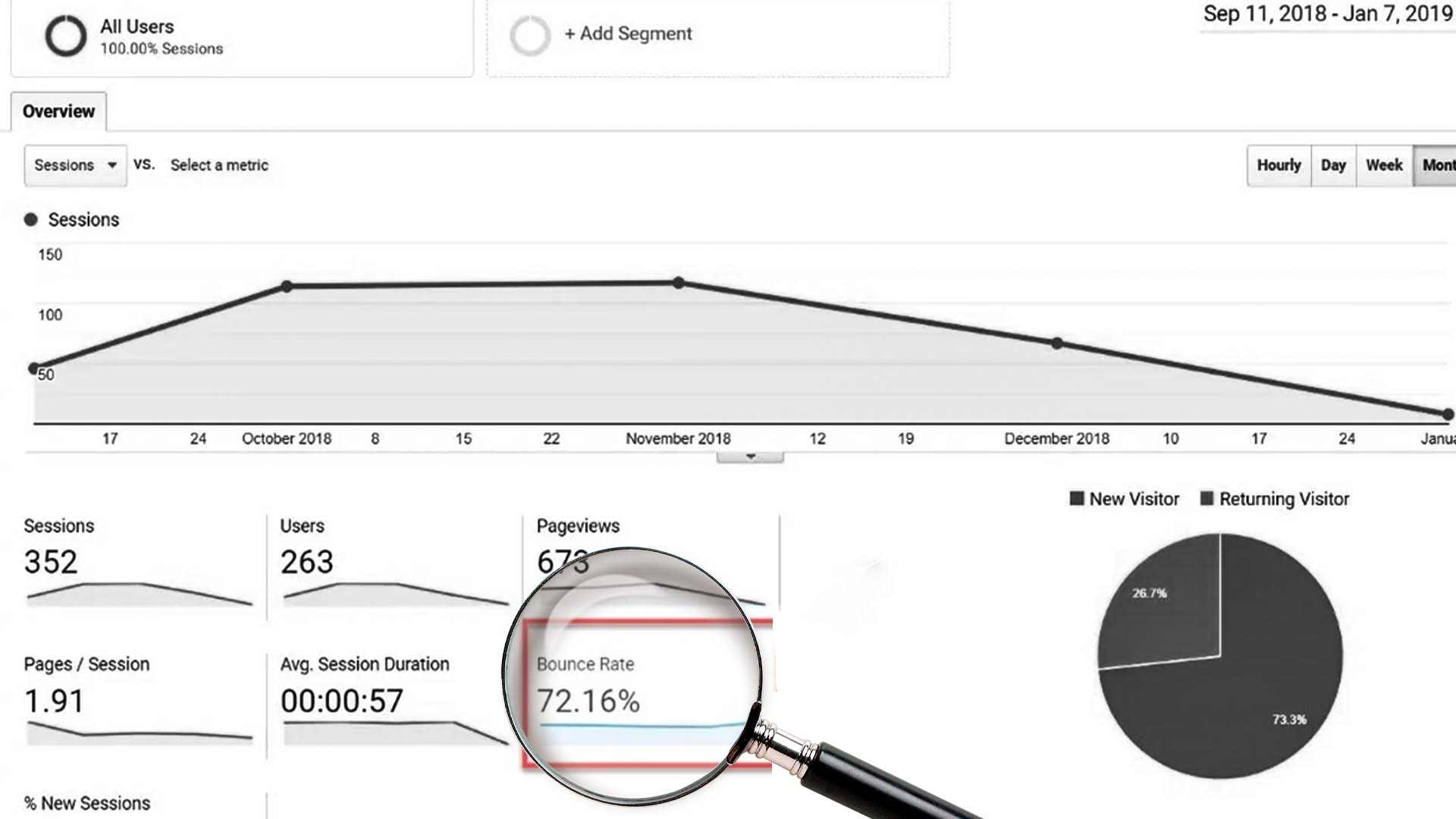

1. You’re losing revenue while waiting for statistical significance

The biggest flaw of traditional A/B testing is its rigid structure. You set up an experiment, split your traffic evenly, and then wait until you’ve collected enough data to reach statistical significance (tests are typically designed for a 0.05 significance level, meaning p < 0.05, and at least 80% statistical power).
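To see why this can take weeks, the sketch below estimates how many visitors a fixed-horizon test needs for the 4% vs 6% banner example. It uses the standard normal-approximation formula for a two-sided two-proportion z-test at α = 0.05 and 80% power; the function name is hypothetical, and testing tools may use different formulas or corrections.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(p1: float, p2: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant for a two-sided two-proportion z-test.

    Normal-approximation formula; real tools may apply other corrections.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_beta = NormalDist().inv_cdf(power)           # quantile giving the desired power
    p_bar = (p1 + p2) / 2                          # pooled rate under "no difference"
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p1 - p2) ** 2)


n = sample_size_per_variant(0.04, 0.06)
print(n, "visitors per variant,", 2 * n, "in total")
```

For this example it comes to a little under 1,900 visitors per variant. Because the requirement scales with 1 / (p1 - p2)², halving the lift to one percentage point roughly quadruples it, which is how lower-traffic sites end up waiting weeks.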

This process can take weeks, depending on traffic volume and conversion rates. During this time, half of your audience may be exposed to an inferior variation, leading to lost conversions and wasted ad spend.

Let’s put this into perspective. Imagine you’re running a flash sale and testing two different discount strategies. One is clearly outperforming the other, but because your A/B test hasn’t reached statistical significance, you continue splitting traffic evenly. By the time you confirm which one is better, the sale is almost over. The opportunity to maximize revenue has passed.

A/B testing assumes you can afford to spend a significant portion of time experimenting before reaping the rewards. That’s a luxury many businesses, especially startups and e-commerce brands, simply don’t have.

2. You’re ignoring real-time user behavior

Traditional A/B testing operates under a static model: you define the test parameters upfront, and they remain fixed throughout the experiment. But user behavior isn’t static—it fluctuates based on time of day, external events, and countless other variables.

For example, a particular variation may perform well during weekday mornings but poorly on weekends. Traditional A/B testing doesn’t adapt to these shifts, leading to misleading conclusions.

Moreover, consumer preferences evolve. The version that wins today may not be the best option next month. By the time you’ve implemented the “winning” variant, your audience’s preferences may have already changed, making your results less reliable.

3. A/B testing struggles with multiple variants

What if you want to test three, four, or even ten different variations? Traditional A/B testing quickly becomes impractical because the more variants you introduce, the longer the test duration.

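A standard A/B/n test (where “n” represents multiple variations) needs far more traffic to reach statistical significance. Each variation needs roughly as many visitors as one arm of a simple A/B test, so total traffic grows at least in proportion to the number of variations: testing five variations instead of two takes at least 2.5 times the traffic, and correcting for the extra comparisons pushes the requirement higher still.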
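The sketch below applies the usual two-proportion sample-size formula to an A/B/n test in which every variant is compared with one control. It assumes a Bonferroni correction (α divided by the number of comparisons), which is only one of several options, and reuses the 4% vs 6% rates as a hypothetical effect size; the function names are illustrative.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(p_control, p_variant, alpha=0.05, power=0.80):
    """Visitors per arm for a two-sided two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_variant) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return ceil(numerator / (p_control - p_variant) ** 2)


def total_traffic(num_arms, p_control=0.04, p_variant=0.06, alpha=0.05, power=0.80):
    """Total visitors for an A/B/n test comparing each variant with one control.

    Assumption: Bonferroni correction, i.e. alpha is split across num_arms - 1 comparisons.
    """
    comparisons = num_arms - 1
    per_arm = sample_size_per_arm(p_control, p_variant, alpha / comparisons, power)
    return num_arms * per_arm


two_arms = total_traffic(2)
five_arms = total_traffic(5)
print(two_arms, five_arms, round(five_arms / two_arms, 2))
```

Under these assumptions five arms need roughly 3.5 times the total traffic of a two-arm test: more than the 2.5× that arm count alone implies, because each comparison must clear a stricter threshold.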

This makes testing multiple creative variations nearly impossible for businesses with limited traffic or time-sensitive campaigns. As a result, many marketers settle for testing just two options—often missing out on discovering the best-performing variation.

4. The risk of false positives and misinterpretation

A/B testing results are only as good as their statistical validity. But many marketers unknowingly fall into the trap of p-hacking—stopping tests early when they see a favorable trend, or running too many tests and cherry-picking results.
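The effect of stopping early is easy to underestimate, so here is a small simulation. It runs hypothetical A/A tests, where both arms share the same true conversion rate, so any declared winner is a false positive. It compares checking a two-proportion z-test once at the end with checking it every day and stopping at the first p < 0.05. The traffic, rate, duration, and function names are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist


def two_sided_p_value(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test p-value using the pooled conversion rate."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions) so far: nothing to test
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(n_sims=400, days=14, visitors_per_day=200, rate=0.05, alpha=0.05, seed=1):
    """Return (false-positive rate checking once at the end,
               false-positive rate checking daily and stopping at the first p < alpha)."""
    rng = random.Random(seed)
    fixed_hits = 0
    peeking_hits = 0
    for _ in range(n_sims):
        conv_a = conv_b = n = 0
        any_look_significant = False
        for _day in range(days):
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_day))
            n += visitors_per_day
            if two_sided_p_value(conv_a, n, conv_b, n) < alpha:
                any_look_significant = True  # a "peeker" would have stopped here
        fixed_hits += two_sided_p_value(conv_a, n, conv_b, n) < alpha
        peeking_hits += any_look_significant
    return fixed_hits / n_sims, peeking_hits / n_sims


fixed_rate, peeking_rate = simulate_aa_tests()
print(f"check once: {fixed_rate:.1%}, peek daily: {peeking_rate:.1%}")
```

Because the daily checks include the final one, the peeking rate can never be lower than the check-once rate, and with 14 looks it typically lands well above the nominal 5%.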

Even when conducted properly, A/B testing can lead to misleading conclusions. A minor fluctuation in traffic or an external factor (such as a competitor’s promotion) can skew results. Without deep statistical expertise, it’s easy to misinterpret findings and implement changes that don’t actually improve performance.

The smarter alternative: multi-armed bandit testing

How multi-armed bandits work

Instead of rigidly splitting traffic 50/50 and waiting for weeks to declare a winner, multi-armed bandit testing dynamically allocates traffic based on real-time performance.

Here’s how it works:

- Start with equal traffic distribution – Just like traditional A/B testing, a multi-armed bandit initially distributes traffic evenly across all variations.

- Learn and adapt continuously – As data comes in, the algorithm identifies which variation is performing better and gradually shifts more traffic toward it.

- Maximize conversions in real time – Instead of waiting for statistical significance, the system constantly optimizes for revenue, minimizing the time spent on underperforming variations.
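The article doesn’t name a specific algorithm, but Thompson sampling is one widely used way to carry out these three steps. This sketch assumes Beta-Bernoulli conversions and simulates visitors with the 4% and 6% rates from the earlier banner scenario; the function name and parameters are hypothetical, and the tools listed later may use different algorithms. With uniform starting beliefs, the first visitors are split essentially at random, matching the “equal distribution” step.

```python
import random


def thompson_sampling(true_rates, visitors, seed=42):
    """Beta-Bernoulli Thompson sampling on simulated visitors.

    For each visitor, draw a plausible conversion rate for every variant from its
    Beta(1 + conversions, 1 + non-conversions) posterior and show the variant with
    the highest draw. `true_rates` drive the simulation and are unknown to the algorithm.
    Returns how many visitors each variant received.
    """
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k
    failures = [0] * k
    shown = [0] * k
    for _ in range(visitors):
        draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = max(range(k), key=draws.__getitem__)
        shown[arm] += 1
        if rng.random() < true_rates[arm]:  # simulated visitor converts
            successes[arm] += 1
        else:
            failures[arm] += 1
    return shown


allocation = thompson_sampling([0.04, 0.06], visitors=20_000)
print(allocation)
```

The same function accepts any number of variants; traffic drifts toward whichever one the evidence favors.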

Think of it as a smart investment strategy. Instead of blindly betting on two stocks and waiting a month to see which one performs better, you invest more in the one that’s already yielding returns—while still keeping a small portion allocated to testing new opportunities.
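The “keep a small portion for testing” idea corresponds most directly to epsilon-greedy, the simplest bandit strategy. In this sketch the 10% exploration rate (`epsilon=0.1`), the function name, and the simulated rates are hypothetical choices.

```python
import random


def epsilon_greedy(true_rates, visitors, epsilon=0.1, seed=7):
    """Epsilon-greedy allocation on simulated visitors.

    With probability `epsilon`, show a random variant (exploration); otherwise show
    the variant with the best observed conversion rate (exploitation).
    Returns the number of visitors shown each variant.
    """
    rng = random.Random(seed)
    k = len(true_rates)
    shown = [0] * k
    conversions = [0] * k
    for _ in range(visitors):
        untried = [i for i in range(k) if shown[i] == 0]
        if untried:
            arm = untried[0]  # show every variant at least once
        elif rng.random() < epsilon:
            arm = rng.randrange(k)  # explore
        else:
            arm = max(range(k), key=lambda i: conversions[i] / shown[i])  # exploit
        shown[arm] += 1
        conversions[arm] += rng.random() < true_rates[arm]
    return shown


print(epsilon_greedy([0.04, 0.06], visitors=20_000))
```

A fixed epsilon keeps exploring forever, which guards against stale estimates but always spends some traffic on weaker variants; Thompson sampling instead explores less as evidence accumulates.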

Why multi-armed bandits outperform A/B testing

1. You’re not wasting traffic on losing variants

Traditional A/B testing forces you to sacrifice half your traffic to an underperforming variant while waiting for statistical significance. Multi-armed bandits quickly shift traffic toward the best-performing option, reducing wasted opportunities.
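One way to quantify wasted traffic is regret: the expected conversions lost compared with always showing the truly best variant. This simulation compares a fixed 50/50 split with Thompson sampling on the banner scenario’s 4% and 6% rates; the 20,000-visitor volume and all function names are hypothetical.

```python
import random


def regret(choices, true_rates):
    """Expected conversions lost versus always showing the best variant."""
    best = max(true_rates)
    return sum(best - true_rates[arm] for arm in choices)


def split_choices(num_arms, visitors):
    """Fixed A/B split: rotate through the variants evenly."""
    return [i % num_arms for i in range(visitors)]


def thompson_choices(true_rates, visitors, seed=3):
    """Beta-Bernoulli Thompson sampling; returns the variant shown to each visitor."""
    rng = random.Random(seed)
    wins = [0] * len(true_rates)
    fails = [0] * len(true_rates)
    choices = []
    for _ in range(visitors):
        draws = [rng.betavariate(1 + w, 1 + f) for w, f in zip(wins, fails)]
        arm = draws.index(max(draws))
        choices.append(arm)
        if rng.random() < true_rates[arm]:
            wins[arm] += 1
        else:
            fails[arm] += 1
    return choices


RATES = [0.04, 0.06]  # simulated, from the banner scenario
VISITORS = 20_000     # hypothetical traffic volume
print("50/50 split:", round(regret(split_choices(2, VISITORS), RATES)))
print("Thompson sampling:", round(regret(thompson_choices(RATES, VISITORS), RATES)))
```

The split’s regret is fixed at half the traffic times the two-point gap (200 conversions here). The bandit’s regret depends on the random draws but is typically a fraction of that.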

2. Faster results, more revenue

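Because bandit algorithms optimize continuously, they start shifting traffic toward the stronger variant as soon as the data begins to favor it, rather than after a fixed test period. Some exploration cost remains early on, but it shrinks as the algorithm gains confidence.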

3. Handles multiple variants with ease

Testing three, five, or even ten variations? No problem. Multi-armed bandits efficiently allocate traffic among multiple variations, allowing you to test and optimize simultaneously without requiring excessive sample sizes.

4. More resilient to changing user behavior

Since bandits adjust dynamically, they can adapt when user preferences shift, as long as the algorithm gives recent data more weight than old data. If a variation that was performing well starts to decline, such a system redirects traffic accordingly, keeping more of your visitors on whatever is currently working best.
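Standard bandits weigh every past visitor equally, so after a long run they can be slow to notice a shift. One common remedy, sketched here, is discounted Thompson sampling, where all accumulated evidence decays by a factor `gamma` per visitor. The decay value, the simulated shift, and the function name are hypothetical.

```python
import random


def discounted_thompson(phases, gamma=0.998, seed=5):
    """Thompson sampling whose evidence decays by `gamma` per visitor, so recent
    visitors count more than old ones (gamma=1.0 is standard Thompson sampling).

    `phases` is a list of (true_rates, visitors) pairs simulating a behavior shift.
    Returns the variant shown to each visitor, in order.
    """
    rng = random.Random(seed)
    k = len(phases[0][0])
    wins = [0.0] * k
    fails = [0.0] * k
    choices = []
    for true_rates, visitors in phases:
        for _ in range(visitors):
            draws = [rng.betavariate(1 + w, 1 + f) for w, f in zip(wins, fails)]
            arm = draws.index(max(draws))
            choices.append(arm)
            # Decay all existing evidence, then record the new observation.
            wins = [w * gamma for w in wins]
            fails = [f * gamma for f in fails]
            if rng.random() < true_rates[arm]:
                wins[arm] += 1
            else:
                fails[arm] += 1
    return choices


# Hypothetical shift: variant B (index 1) is better at first, then A (index 0) overtakes it.
PHASES = [([0.02, 0.10], 5_000), ([0.10, 0.02], 5_000)]

for gamma in (1.0, 0.998):
    late = discounted_thompson(PHASES, gamma=gamma)[-2_500:]
    print(f"gamma={gamma}: share of late traffic on A = {late.count(0) / len(late):.0%}")
```

Comparing `gamma=1.0` (no decay) with `gamma=0.998` on the same shift shows the trade-off: decay lets the bandit follow the change, at the cost of continually re-exploring variants it had previously written off.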

How to get started with multi-armed bandit testing

If you’re ready to move beyond traditional A/B testing, here are a few tools that support multi-armed bandit testing:

- Growie (our all-in-one free CRO tool)

- Optimizely

- VWO

These platforms allow you to set up bandit tests with minimal effort, making it easier than ever to optimize your campaigns dynamically.

To conclude, while traditional A/B testing has served marketers well for years, its limitations are becoming increasingly costly. The slow time-to-insight, wasted traffic, and inability to adapt to real-time user behavior make it an inefficient method for today’s fast-moving digital landscape.

Multi-armed bandit testing offers a smarter, revenue-maximizing alternative. By continuously optimizing traffic allocation, reducing wasted opportunities, and adapting to shifting user behavior, bandit testing helps you make the most of every visitor, not just after the test concludes but from day one.

If you’re serious about conversion rate optimization, it’s time to move beyond outdated A/B testing methods and embrace a more intelligent approach. The tools are available, the data is clear, and the opportunity cost of sticking with traditional A/B testing is too high to ignore.